Fiddler is a great web debugger for web developers on any platform. ELMAH is great for error logging in ASP.NET apps. Both are practically must-have tools for any ASP.NET developer. So how about combining them to debug ASP.NET errors more easily?

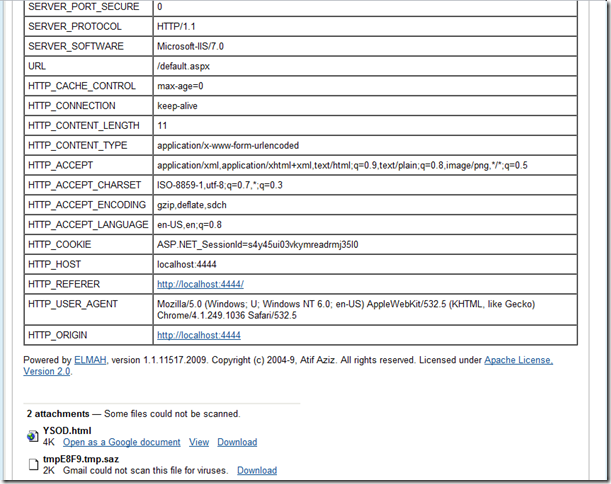

Here's a module that attaches a SAZ file to every ELMAH mail. If you're not familiar with Fiddler, SAZ stands for Session Archive Zip: it's basically a ZIP file containing raw HTTP requests and responses. After installing this module, a sample ELMAH mail might look like this:

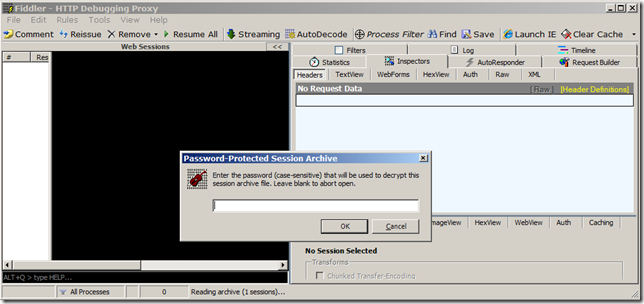

See the last attachment? It's our SAZ file. Click on it to open it with Fiddler:

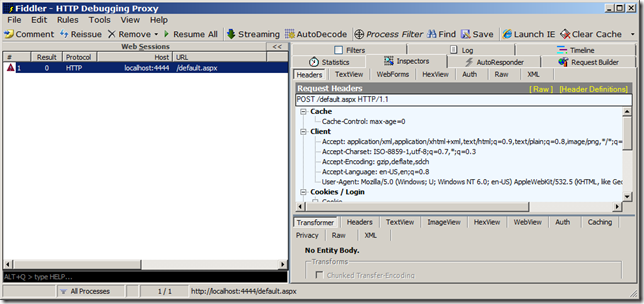

The SAZ is password-protected since the HTTP form might have sensitive information. Enter the password and you can see the request:

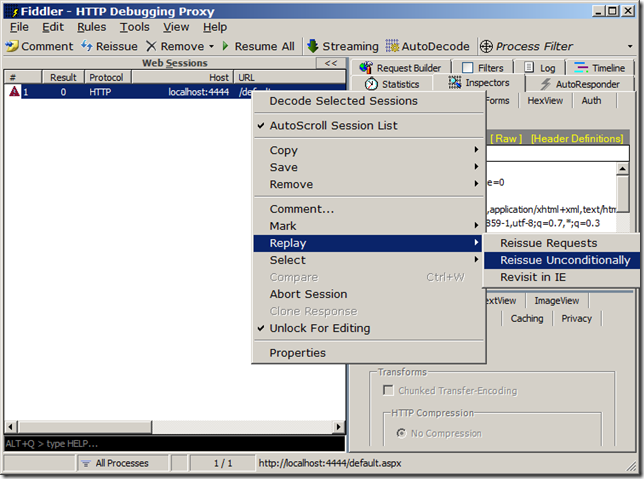

Now you can edit the request in Fiddler, change the host to your local instance of the website, and then replay the request to reproduce the error.

Configuration is very easy: just register the ElmahMailSAZModule after ELMAH's ErrorMailModule. You can optionally supply a configuration, e.g.:

    <configuration>
      <configSections>
        <sectionGroup name="elmah">
          <section name="errorMail" requirePermission="false" type="Elmah.ErrorMailSectionHandler, Elmah"/>
          <section name="errorMailSAZ" requirePermission="false" type="ElmahFiddler.ElmahMailSAZModule, ElmahFiddler"/>
        </sectionGroup>
      </configSections>
      <elmah>
        <errorMailSAZ>
          <password>bla</password>
          <exclude>
            <url>default</url>
            <url>blabla</url>
          </exclude>
        </errorMailSAZ>
        <errorMail from="pepe@gmail.com" to="pepe@gmail.com" subject="ERROR From Elmah:" async="false" smtpPort="587" useSsl="true" smtpServer="smtp.gmail.com" userName="pepe@gmail.com" password="pepe" />
      </elmah>
      ...

This applies the password "bla" to the SAZ files and skips SAZ creation for any request that matches the regular expressions "default" or "blabla". The exclusion list is useful to prevent potentially huge SAZ files coming from requests with file uploads.
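The configuration above only covers the `elmah` section, not the module registration itself. A minimal sketch of that registration for the classic `<system.web>` pipeline might look like the following. The `name` values are arbitrary labels chosen for illustration, and the type name for the SAZ module is an assumption taken from the `errorMailSAZ` section declaration above; `Elmah.ErrorMailModule` is ELMAH's standard mail module.

```xml
<system.web>
  <httpModules>
    <!-- ELMAH's own mail module is registered first -->
    <add name="ErrorMail" type="Elmah.ErrorMailModule, Elmah"/>
    <!-- The SAZ module follows it; type name assumed from the section declaration -->
    <add name="ErrorMailSAZ" type="ElmahFiddler.ElmahMailSAZModule, ElmahFiddler"/>
  </httpModules>
</system.web>
```

On IIS 7 and later in integrated pipeline mode, the same two entries would go under `<system.webServer><modules>` instead.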

Caveats:

- Requires async="false" on the mail module, since it needs access to the current HttpContext.

- Does not include the HTTP response. This could be implemented using Response.Filter, but I'm not sure it's worth it.

I'm also playing with the idea of keeping a trace of all the requests in a user session, in order to reproduce more complex scenarios (SAZ files can accommodate multiple requests). This would place a considerable load on the server though, and the resulting SAZ file could get quite big.

Source code is here. It's a VS2010 / .NET 4.0 solution.

Kudos to Eric Lawrence for recently implementing SAZ support in FiddlerCore. Without it, this wouldn't be possible.